INTRODUCTION:

One of the most common attack vectors is input into a system, whether from a user or another source. Assuming that input is well-formed is risky, yet developers still do it. Security vulnerabilities remain “merely” defects in the code unless the conditions required to trigger them are present, so the key to a successful attack is to create those conditions. User input (via a UI, terminal access, or another channel) is a common way to do this. Tracing the data flow from source to destination (sink) is a key capability of CodeSonar, which it provides through tainted data analysis.

What is Tainted Data?

Any unchecked and unsanitized input into a device is considered tainted. Security best practice dictates that all input from outside the boundaries of the system be treated as untrusted. No assumptions can be made about the correctness of this data when designing and implementing the system. SQL injection attacks, which use malformed input on websites, are a good example of the risk. Left unchecked, such input can cause arbitrary SQL to execute within the system, exposing data and/or corrupting the database. Embedded systems are not immune to this kind of problem, even when they have no UI or direct user input.

Sources of tainted data include all kinds of external input into the system, such as:

- Environment variables

- File contents

- File metadata, such as a file’s permissions or datestamps

- The network

- Network services, such as the results of a DNS query

- The system clock

The location where tainted data is used unchecked is referred to as the tainted data sink, which could be a well-known dangerous operation like strcpy(). Once an input has been properly checked, it is considered cleansed and no longer tainted.
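As a minimal sketch of what cleansing can look like, the C fragment below treats an environment variable as the source and an array index as the sink. The names parse_index, read_slot_from_env, table, and TABLE_SIZE are hypothetical and chosen only for illustration; the point is that the value is fully validated before it reaches the sink.

```c
#include <errno.h>
#include <stddef.h>
#include <stdlib.h>

#define TABLE_SIZE 16

/* Hypothetical lookup table; stands in for any fixed-size resource. */
static int table[TABLE_SIZE];

/*
 * Parse an untrusted string as an index into table.
 * Returns 0 and stores the index in *out only if the text is a complete
 * decimal number in [0, TABLE_SIZE); returns -1 otherwise.
 */
static int parse_index(const char *text, size_t *out)
{
    char *end;
    long value;

    if (text == NULL || *text == '\0')
        return -1;              /* missing or empty input */

    errno = 0;
    value = strtol(text, &end, 10);
    if (errno != 0 || *end != '\0')
        return -1;              /* overflow, no digits, or trailing garbage */
    if (value < 0 || value >= TABLE_SIZE)
        return -1;              /* out of range for the table */

    *out = (size_t)value;
    return 0;
}

/* Source: environment variable. Sink: array indexing. */
int read_slot_from_env(const char *name, int *result)
{
    size_t index;

    if (parse_index(getenv(name), &index) != 0)
        return -1;              /* still tainted: refuse to use it */

    *result = table[index];     /* index has been cleansed at this point */
    return 0;
}
```

A caller would check the return value of read_slot_from_env() and treat -1 as "input rejected" rather than falling back to a default index.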

Turning a Vulnerability into an Attack with Tainted Data

A vulnerability is a software bug that has the potential to crash a system, expose data, execute injected code, or open the door to other unwanted outcomes. Vulnerabilities become serious security threats when there is a path to exploit them from the attack surface of the device (i.e., from a tainted data source). Below is a straightforward example that illustrates how reading system environment variables can be risky:

void config(void)
{
    char buf[100];
    int count;
    …
    strcpy(buf, getenv("CONFIG"));
    …
}

In this example, getenv() brings input from outside the system into the program by retrieving the contents of the environment variable CONFIG. This seems innocuous at first, since the assumption is that any reasonable environment variable would be fewer than one hundred characters, right? Wrong. A malformed value could have disastrous effects, from crashing the system to arbitrary code execution, due to a buffer overflow in strcpy(). The tainted data source in this case is the getenv() call and the sink is the strcpy() function. Now, this is a simple example. In more complex cases, the source and sink can be in different source files with complex inter-procedural dataflow between them.
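One possible way to cleanse the input in this example is sketched below. It is an illustration under assumptions, not the only or canonical fix: copy_checked, config_checked, and CONFIG_BUF_SIZE are hypothetical names, and the sketch chooses to reject an oversized value outright instead of truncating it.

```c
#include <stdlib.h>
#include <string.h>

#define CONFIG_BUF_SIZE 100

/*
 * Copy an untrusted string into a fixed-size buffer.
 * Returns 0 on success, -1 if src is NULL or does not fit (including
 * the terminating NUL). dst is left untouched on failure.
 */
int copy_checked(char *dst, size_t dst_size, const char *src)
{
    size_t len;

    if (dst == NULL || dst_size == 0 || src == NULL)
        return -1;

    len = strlen(src);
    if (len >= dst_size)
        return -1;              /* would overflow: reject, do not truncate */

    memcpy(dst, src, len + 1);  /* length verified; copy includes the NUL */
    return 0;
}

/* Variant of config() that reports failure instead of overflowing buf. */
int config_checked(void)
{
    char buf[CONFIG_BUF_SIZE];

    /* getenv() returns NULL when CONFIG is not set. */
    if (copy_checked(buf, sizeof buf, getenv("CONFIG")) != 0)
        return -1;

    /* ... use buf as the original config() would ... */
    return 0;
}
```

Rejecting the value keeps the failure visible to the caller; silently truncating it would avoid the overflow but could still leave the system running with a configuration nobody intended.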

A dataflow from a tainted data source to a vulnerability (sink) is a serious security threat and underscores the need for dataflow analysis as part of a security static analysis tool. Finding these dataflows manually is very time-consuming, so an automated approach is needed.

Automated Tainted Data Analysis

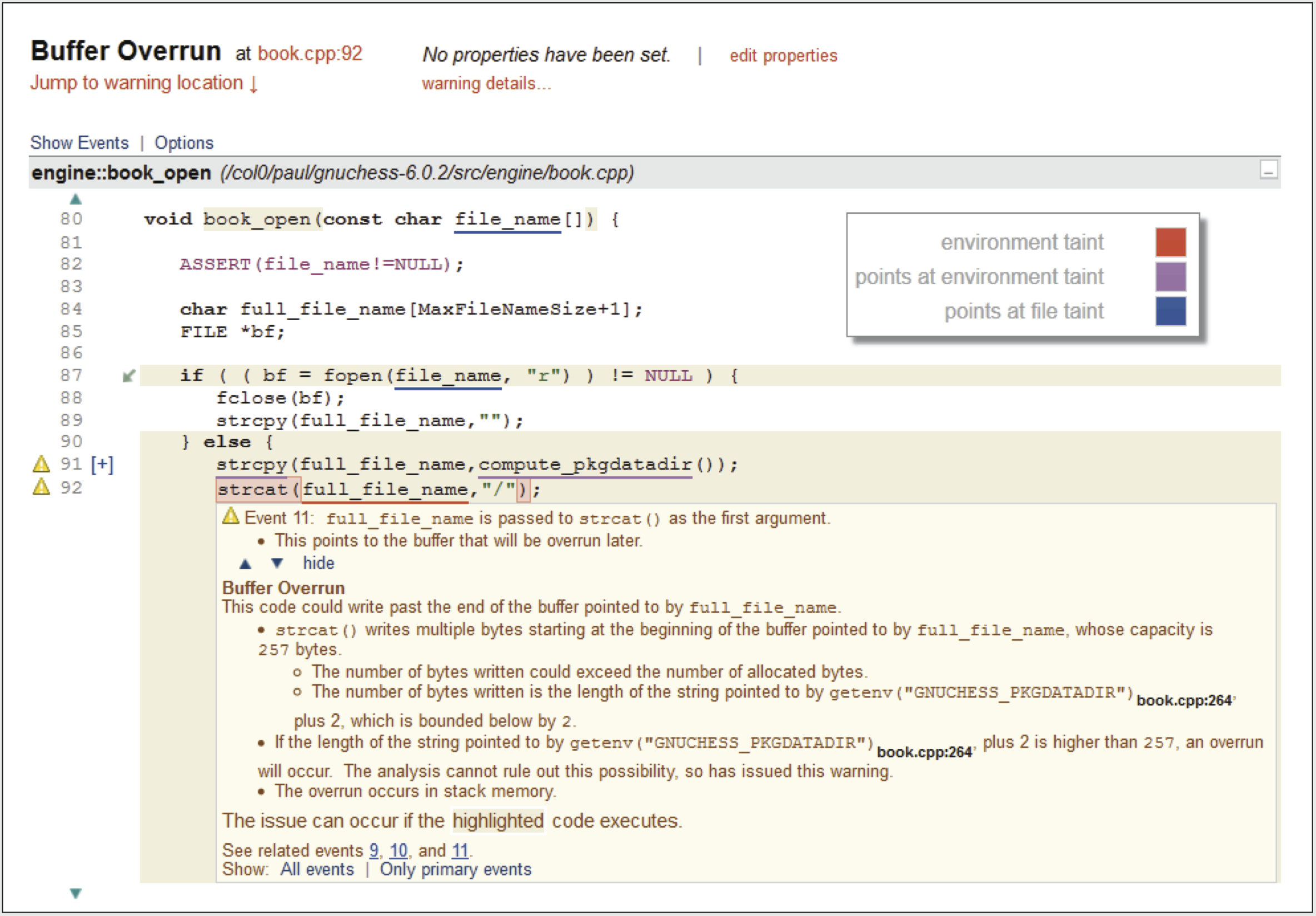

Tainted dataflow is discovered using internal representations of the code that are built during static analysis. Advanced tools like CodeSonar create internal models of the code that describe syntax, control flow, and dataflow, and checkers are written to make use of these representations. A checker that detects a buffer overflow is augmented with analysis of data sources to see whether there is a connection to system inputs. Discovering such a connection means the overflow is not just a serious error but a potential security vulnerability too. An example of such a report from CodeSonar is below, showing how it indicates sources of tainted data:

Figure 1: A buffer overrun warning where the underlining shows the effect of tainted data.
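To make the idea of following taint through a program concrete, here is a deliberately tiny sketch in C. It is not how CodeSonar works internally; it only illustrates the general principle on a hypothetical straight-line program in which each variable carries a single taint bit. The types and names (enum op, struct stmt, count_tainted_sinks, MAX_VARS) are invented for this example, and a real analyzer must also handle branches, loops, pointers, and calls across files.

```c
#include <stdbool.h>
#include <stddef.h>

enum op { OP_SOURCE, OP_COPY, OP_CLEANSE, OP_SINK };

/* One statement in a toy straight-line program over numbered variables. */
struct stmt {
    enum op op;
    int dst;   /* variable written, checked, or passed to the sink */
    int src;   /* variable read; used by OP_COPY only */
};

#define MAX_VARS 8

static bool valid_var(int v)
{
    return v >= 0 && v < MAX_VARS;
}

/*
 * Walk the statements in order, tracking one taint bit per variable.
 * Returns the number of sinks reached by tainted data, or -1 if a
 * statement refers to a variable outside [0, MAX_VARS).
 */
int count_tainted_sinks(const struct stmt *prog, size_t n)
{
    bool tainted[MAX_VARS] = { false };
    int findings = 0;

    for (size_t i = 0; i < n; i++) {
        const struct stmt *s = &prog[i];

        if (!valid_var(s->dst) || (s->op == OP_COPY && !valid_var(s->src)))
            return -1;

        switch (s->op) {
        case OP_SOURCE:  tainted[s->dst] = true;            break; /* e.g. getenv() */
        case OP_COPY:    tainted[s->dst] = tainted[s->src]; break; /* taint follows data */
        case OP_CLEANSE: tainted[s->dst] = false;           break; /* validated input */
        case OP_SINK:    if (tainted[s->dst]) findings++;   break; /* e.g. strcpy() */
        }
    }
    return findings;
}
```

Modeling the earlier config() example as "source into variable 0, copy to variable 1, sink on variable 1" yields one finding; adding a cleanse step on variable 1 before the sink yields none.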

Software applications are complex, and their data and control flow are equally complex and hard to analyze without visualization tools. Tracing tainted data from sources to sinks is an important security audit technique that greatly reduces the risk of vulnerabilities. GrammaTech CodeSonar provides complete call and data graph analysis and highlights tainted data sources and sinks, as illustrated below:

Figure 2: A top-down view of the call graph of a program showing modules according to the physical layout of code in files and directories. The red coloration shows the modules with the most tainted data sources, and the blue “glow” shows modules with tainted data sinks.

CONCLUSION:

Assuming system inputs are well-formed and reasonable is dangerous, and when paired with vulnerable code, can lead to system crashes, data exposure, and code injection/execution. The automated tainted dataflow analysis and guidance that CodeSonar provides is essential to discovering these serious vulnerabilities and fixing them efficiently.